With

Mesosphere's Datacenter Operating System (DCOS), it's possible to develop

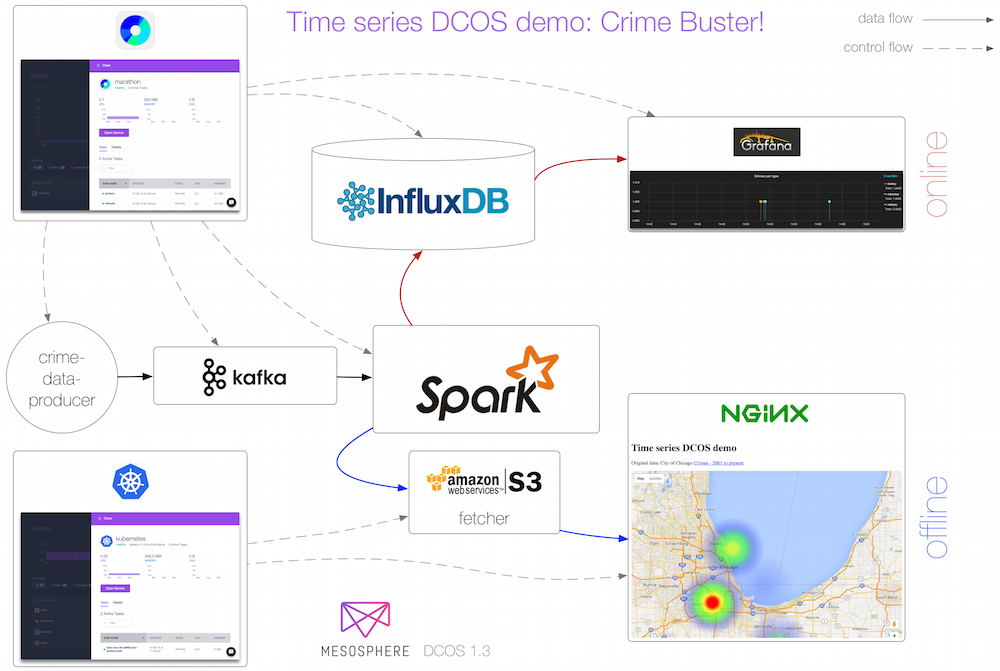

and deploy a non-trivial, production-ready distributed application in a matter of days. We were able to prove this during a recent company offsite meeting, by creating—in just three days—a crime-mapping app built on a backend of DCOS, Marathon, Kubernetes, Kafka, Spark, InfluxDB and other components.

Without DCOS, a team attempting the same thing would likely still be installing and configuring the individual components by the time we had completed the entire project.

The mission

Mesosphere recently had its company offsite meeting in Mexico. As part of a hackathon during the trip, my team decided to build a DCOS demo using real-world crime data. The stories that crime data (in our case, some open data from the City of Chicago) can tell are manifold: from real estate planning to police dispatch, there's a lot of value in having online access to the data (which crime is peaking right now) as well as offline access (historically speaking, where the geographical hotspots for crime in the city are).

The team working on the time series demo consisted of Michael Gummelt, Tobi Knaup, Stefan Schimanski, James DeFelice and me. We had about three days to complete the project, from idea to architecture to implementation and documentation. We started with no existing codebase to build on.

However, because DCOS allows for rapid iterations and the architecture is rather modular, we were able to get the demo done in time and still enjoy some fun in the sun.

Here is the architecture we developed:

Our underlying goal was to demonstrate and apply as many good practices as possible, such as:

- By using custom Docker images like mhausenblas/tsdemo-s3-fetcher we demonstrated a simple yet effective CI/CD pipeline within DCOS.

- By using Kubernetes we showed how having the web app and the S3 fetcher in a pod is beneficial in terms of data locality.

- By using secrets in Kubernetes we demonstrated how to pass along AWS credentials in a secure manner.

- By using Kafka to feed both the online and the offline part we showed how easily different workloads benefit from a single, reliable data source.
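To make the pod and secrets points above more concrete, here is a minimal sketch of what such a pod definition could look like, written as a small Python script that builds and prints a Kubernetes v1 Pod manifest. Only the `mhausenblas/tsdemo-s3-fetcher` image name comes from the demo. The web app image, the pod, volume and secret names, the secret keys, and the choice of injecting credentials as environment variables (rather than as a mounted secret volume) are all assumptions made for illustration.

```python
import json


def build_pod_manifest(fetcher_image, webapp_image, secret_name):
    """Return a Kubernetes v1 Pod manifest as a plain dict.

    The S3 fetcher and the web app share one emptyDir volume, so data the
    fetcher downloads is local to the web app. Only the fetcher receives
    the AWS credentials, which are read from a Kubernetes secret.
    """
    # Hypothetical secret keys; the real secret layout may differ.
    aws_env = [
        {
            "name": env_var,
            "valueFrom": {"secretKeyRef": {"name": secret_name, "key": key}},
        }
        for env_var, key in (
            ("AWS_ACCESS_KEY_ID", "access-key-id"),
            ("AWS_SECRET_ACCESS_KEY", "secret-access-key"),
        )
    ]
    shared_mount = {"name": "crime-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "offline-reporting"},  # hypothetical pod name
        "spec": {
            "volumes": [{"name": "crime-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "s3-fetcher",
                    "image": fetcher_image,
                    "env": aws_env,
                    "volumeMounts": [shared_mount],
                },
                {
                    "name": "webapp",
                    "image": webapp_image,
                    # The web app only reads what the fetcher wrote.
                    "volumeMounts": [dict(shared_mount, readOnly=True)],
                },
            ],
        },
    }


manifest = build_pod_manifest(
    "mhausenblas/tsdemo-s3-fetcher",
    "example/tsdemo-webapp",  # hypothetical image name
    "aws-credentials",        # hypothetical secret name
)
print(json.dumps(manifest, indent=2))
```

Because both containers live in the same pod, they are always scheduled onto the same node and can exchange data through the shared volume, which is the data-locality benefit mentioned above.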

What we learned

The Spark Streaming process implementation went smoothly, with only a few minor issues, such as data ingestion into InfluxDB via its HTTP API and JSON serialization challenges. Our implementation, which combines the online processing part (output into InfluxDB) and the offline processing part (writes into a pre-defined S3 bucket) in one single Spark Streaming app, is not considered good practice. We went with it because of the time constraints of the hackathon.
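One way to keep the two paths easy to pull apart later, even while they share a single app, is to have the per-batch handler depend only on two injected sink functions. The following is a sketch in plain Python, not the demo's Spark code: `process_batch`, the two sink callables and the `primary_type` field are hypothetical names, and the dictionaries handed to the online sink are illustrative rather than InfluxDB's actual wire format.

```python
import json
from collections import Counter
from typing import Callable, Dict, Iterable

Record = Dict[str, str]


def process_batch(
    records: Iterable[Record],
    write_online: Callable[[dict], None],
    write_offline: Callable[[str], None],
) -> None:
    """Send one micro-batch of crime records to both sinks."""
    records = list(records)

    # Online path: aggregate per crime type, one point per type.
    counts = Counter(r["primary_type"] for r in records)
    for crime_type, count in sorted(counts.items()):
        write_online({"series": "crimes", "crime_type": crime_type, "count": count})

    # Offline path: keep the raw records, one JSON document per line.
    if records:
        write_offline("\n".join(json.dumps(r, sort_keys=True) for r in records))


# Usage with in-memory sinks standing in for InfluxDB and S3.
online_points, offline_blobs = [], []
batch = [
    {"primary_type": "THEFT"},
    {"primary_type": "BATTERY"},
    {"primary_type": "THEFT"},
]
process_batch(batch, online_points.append, offline_blobs.append)
```

With this shape, splitting the app in two later mostly means moving each half of `process_batch` into its own job that reads from the same Kafka topic.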

In our demo, AWS S3 is the main link between the Spark Streaming process and the offline reporting web app. It's a handy mechanism and straightforward to use from the consuming, downstream web app.

We hit a few bugs with Kubernetes (which we used to implement the offline processing part), all but one of which are "core" bugs. That is, they are present in Kubernetes itself and are not related to how Kubernetes is set up in DCOS:

A learning experience

Within three days, we managed to assemble the codebase and to test and deploy a working distributed application, end to end. With DCOS, it's straightforward to implement both the stateless parts and the core data pipeline of the app. While we identified some areas for improvement, such as replacing InfluxDB with Cassandra+KairosDB, we were overall really happy with the outcome.

We'd love to hear from you, whether you have had similar experiences or want to try out our demo.