The Mesosphere Datacenter Operating System (DCOS) is a distributed, highly available task scheduler based on Apache Mesos. We have customers running it in production across tens of thousands of machines, and running everything from Docker containers to big data systems. And while our mission is to make the DCOS experience as user-friendly as possible, there are certain aspects that are inherently more difficult.

Installing DCOS has historically been one of them, but it's a problem we've been able to conquer with our new GUI-based installer. This post explains how we built it and how it revolutionizes the DCOS installation experience.

Installation challenge

Installing DCOS has always been a tricky endeavor because every cluster has site-specific customizations that must be translated into configuration files for various pieces of the DCOS ecosystem. These configuration files need to be compiled into a shippable package, and then that package has to be installed on tens of thousands of hosts.

When you install DCOS, you also need to install a specific role on each host, depending on whether that host is a master, a public agent, or a private agent (often called simply an agent).
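To make the role requirement concrete, here is a minimal sketch of how hosts might be mapped to roles before anything is installed. The `Role` enum and the `assign_roles` helper are hypothetical names invented for this illustration, not part of the installer, and the IP addresses in the usage note are placeholders.

```python
from enum import Enum
from typing import Dict, Iterable


class Role(Enum):
    """The three host roles named in this post (hypothetical representation)."""
    MASTER = "master"
    PUBLIC_AGENT = "public agent"
    PRIVATE_AGENT = "private agent"


def assign_roles(
    masters: Iterable[str],
    public_agents: Iterable[str],
    private_agents: Iterable[str],
) -> Dict[str, Role]:
    """Map each host to exactly one role, rejecting hosts listed twice."""
    assignments: Dict[str, Role] = {}
    groups = (
        (Role.MASTER, masters),
        (Role.PUBLIC_AGENT, public_agents),
        (Role.PRIVATE_AGENT, private_agents),
    )
    for role, hosts in groups:
        for host in hosts:
            if host in assignments:
                # A host can only carry one role, so a repeat is an error.
                raise ValueError(
                    f"{host} is listed as both "
                    f"{assignments[host].value} and {role.value}"
                )
            assignments[host] = role
    return assignments
```

Calling `assign_roles(["10.0.0.1"], ["10.0.0.2"], ["10.0.0.3"])` returns a dictionary with one entry per host, and listing the same address under two roles raises a `ValueError`.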

Sure, this whole thing would be simple with Ansible, Puppet or Chef. But you can't ship enterprise software and force your paying customers into using one of these systems over the other. We do, of course, take integration and best practices with these deployment management tools very seriously, but our installer has to work even if our customers don't use them.

SSH-based installation

Mesosphere is a Python shop, so leveraging an existing library to handle the SSH connections would have been fantastic. We vetted the following options:

- Ansible SSH Library

- Salt SSH Library

- Paramiko

- Parallel SSH

- Async SSH

- Subprocess (shelling out to SSH)

None of the SSH libraries worked for us: each was either incompatible with Python 3.x or had licensing restrictions. This left us with concurrent subprocess calls to the SSH executable.

This wasn't an option we were particularly fond of: If you've ever seen how much code it takes to make this a viable option, you can understand it's not trivial. Just look at Ansible's SSH executor class.

Also, our final product is a web-based GUI with a CLI utility, so whatever we chose had to be compatible with asyncio, the asynchronous I/O library underlying the final HTTP API.
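As a rough illustration of the approach described in this section (concurrent subprocess calls to the ssh executable, driven by asyncio), here is a minimal sketch. It is not the installer's actual code: the function names, the choice of SSH options and the concurrency limit are all assumptions made for the example.

```python
import asyncio
from typing import Dict, List, Optional, Sequence, Tuple


def build_ssh_command(
    host: str,
    user: str,
    remote_command: str,
    key_path: Optional[str] = None,
) -> List[str]:
    """Build the argv for one non-interactive ssh invocation."""
    argv = [
        "ssh",
        "-o", "BatchMode=yes",            # never prompt for a password
        "-o", "StrictHostKeyChecking=no",  # assumption: hosts are not yet known
        "-o", "ConnectTimeout=10",
    ]
    if key_path is not None:
        argv += ["-i", key_path]
    argv += [f"{user}@{host}", remote_command]
    return argv


async def run_command(
    argv: Sequence[str], semaphore: asyncio.Semaphore
) -> Tuple[int, str, str]:
    """Run one subprocess and return (exit code, stdout, stderr)."""
    async with semaphore:  # cap how many ssh processes run at once
        proc = await asyncio.create_subprocess_exec(
            *argv,
            stdout=asyncio.subprocess.PIPE,
            stderr=asyncio.subprocess.PIPE,
        )
        stdout, stderr = await proc.communicate()
        return proc.returncode, stdout.decode(), stderr.decode()


async def run_on_hosts(
    hosts: Sequence[str],
    user: str,
    remote_command: str,
    max_parallel: int = 10,
) -> Dict[str, Tuple[int, str, str]]:
    """Run the same command on every host concurrently."""
    semaphore = asyncio.Semaphore(max_parallel)
    tasks = [
        run_command(build_ssh_command(host, user, remote_command), semaphore)
        for host in hosts
    ]
    results = await asyncio.gather(*tasks)
    return dict(zip(hosts, results))


# Example usage (placeholder hosts and user):
# results = asyncio.run(run_on_hosts(["10.0.0.1", "10.0.0.2"], "centos", "uptime"))
```

Even this small sketch hints at why the option is not trivial: a real executor also needs timeouts, retries, output streaming and careful error reporting on top of it.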

YAML-based configuration file format

Previous versions of DCOS shipped what our customers know as dcos_generate_config.sh. This bash script is actually a self-extracting Docker container that runs our configuration generation library, which builds the DCOS configuration files from the input in config.yaml.

The configuration file used to be in JSON format, but we moved it to a more user-friendly YAML format. In DCOS v1.5 we shipped the first version of this new configuration file format. In DCOS v1.6 we modified the format to flatten the configuration parameters so there are no nested dictionaries, further simplifying the process.
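To show what flattening means in practice, here is a small sketch that promotes every nested parameter to the top level. It only illustrates the idea: the `flatten_config` helper and the key names in the usage note are hypothetical, and the real v1.5 and v1.6 parameter names are not reproduced here.

```python
from typing import Any, Dict


def flatten_config(nested: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the config with all nested dictionaries removed.

    Parameters inside a nested section are moved to the top level under
    their own name; the section name itself is dropped.
    """
    flat: Dict[str, Any] = {}

    def add(key: str, value: Any) -> None:
        # Two sections may define the same parameter name; refuse to guess.
        if key in flat:
            raise ValueError(f"duplicate parameter after flattening: {key}")
        flat[key] = value

    for key, value in nested.items():
        if isinstance(value, dict):
            for inner_key, inner_value in flatten_config(value).items():
                add(inner_key, inner_value)
        else:
            add(key, value)
    return flat
```

With hypothetical input such as `{"cluster_section": {"cluster_name": "demo"}, "ssh_section": {"ssh_user": "centos"}}`, the result contains only the top-level keys `cluster_name` and `ssh_user`, which is the kind of file a user can read and edit without tracking indentation levels.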

The UI

Finally, we built a completely new web-based graphical user interface. Previously, DCOS end-users relied on our documentation to get the configuration parameters in their config.yaml correct. Keeping these parameters up to date meant lots of documentation updates, and users had to make sure they entered them correctly. The new GUI gives our end users constant feedback about the state of their configuration, and we hope to make this experience more dynamic in the future:

The configuration page gives you robust error information:

The preflight process installs cluster host prerequisites for you:

The preflight process gives you real-time preflight feedback across all cluster hosts:

All stages give you real-time status bars:

The postflight ensures the deployment process was successful and that your cluster is ready for use:

You can get a detailed log of each stage at any time and send it to our support team if you run into problems:

Command line ready

And even though you can deploy DCOS from the GUI, we also include all the functionality on the CLI.

Optional arguments for the CLI are:

- -h, --help: show this help message and exit

- --hash-password: Hash a password on the CLI for use in the config.yaml.

- -v, --verbose: Verbose log output (DEBUG).

- --offline: Do not install preflight prerequisites on CentOS7, RHEL7 in web mode

- --web: Run the web interface.

- --genconf: Execute the configuration generation (genconf).

- --preflight: Execute the preflight checks on a series of nodes.

- --install-prereqs: Install preflight prerequisites. Works only on CentOS7 and RHEL7.

- --deploy: Execute a deploy.

- --postflight: Execute postflight checks on a series of nodes.

- --uninstall: Execute uninstall on target hosts.

- --validate-config: Validate the configuration for executing genconf and deploy arguments in config.yaml

Deployment from the CLI allows you to build the installer into your automated workflows or to use advanced configuration parameters in your config.yaml that are not currently supported by the UI. You can also use the CLI to skip steps that are mandatory in the UI, which is sometimes simply a matter of convenience.

If you choose this route, you'll have to run dcos_generate_config.sh several times, with a different argument each time, to get a fully deployed cluster.

Once you've put your config.yaml and ip-detect script in a directory called genconf/ that sits alongside dcos_generate_config.sh, you can start passing the configuration generation and deploy arguments to dcos_generate_config.sh:

Execute configuration validation:

If configuration needs some work:

Execute configuration generation:

Install prerequisites on your cluster hosts:

Execute preflight:

If things are not right in your cluster, preflight will let you know:

Deploy with robust state feedback:

You can also run --postflight to find out when your cluster has gained a quorum and is in a usable state.
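If you want to build these steps into an automated workflow, the sequence can be scripted. The sketch below is one possible wrapper, written under several assumptions: that the installer is invoked with bash from the directory that contains it, that each step exits with a non-zero status on failure, and that stopping at the first failed step is the behavior you want. The flags come from the option list earlier in this post; the helper names are hypothetical.

```python
import subprocess
from pathlib import Path
from typing import List, Sequence

INSTALLER = "dcos_generate_config.sh"

# The CLI steps in the order this post walks through them.
STEPS = (
    "--validate-config",
    "--genconf",
    "--install-prereqs",
    "--preflight",
    "--deploy",
    "--postflight",
)

# Files the installer expects to find in the genconf/ directory.
REQUIRED_INPUTS = ("config.yaml", "ip-detect")


def missing_inputs(installer_dir: Path) -> List[str]:
    """Return the required genconf/ files that are not present."""
    genconf = installer_dir / "genconf"
    return [name for name in REQUIRED_INPUTS if not (genconf / name).is_file()]


def build_commands(
    installer: str = INSTALLER, steps: Sequence[str] = STEPS
) -> List[List[str]]:
    """Build one argv per step (assumption: the script is run via bash)."""
    return [["bash", installer, step] for step in steps]


def run_workflow(commands: Sequence[Sequence[str]]) -> bool:
    """Run each command in order and stop at the first failure."""
    for argv in commands:
        print("Running:", " ".join(argv))
        result = subprocess.run(list(argv))
        if result.returncode != 0:
            # Assumption: a non-zero exit status means the step failed.
            print("Step failed:", argv[-1])
            return False
    return True


# Example usage, run from the directory that holds the installer:
# if not missing_inputs(Path(".")):
#     run_workflow(build_commands())
```

Checking for config.yaml and ip-detect up front avoids starting a run that cannot succeed, and keeping the step list in one tuple makes it easy to drop a step such as --install-prereqs when your hosts are already prepared.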

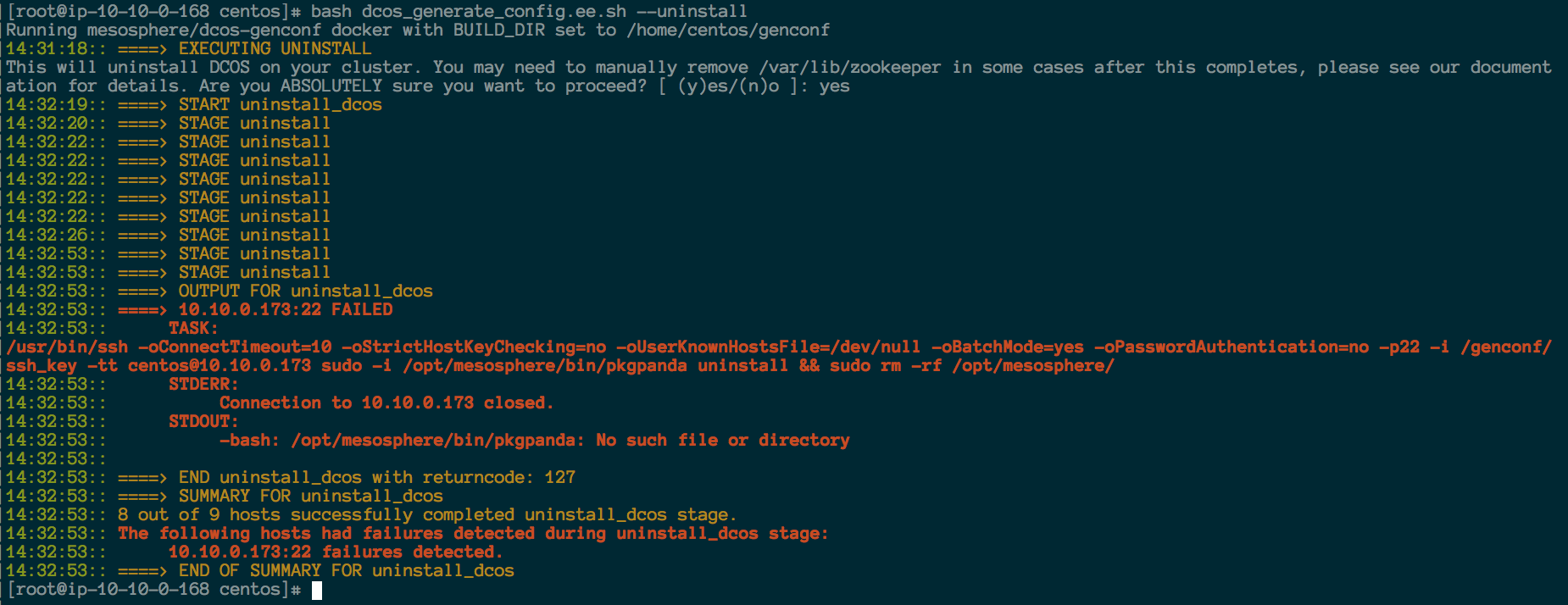

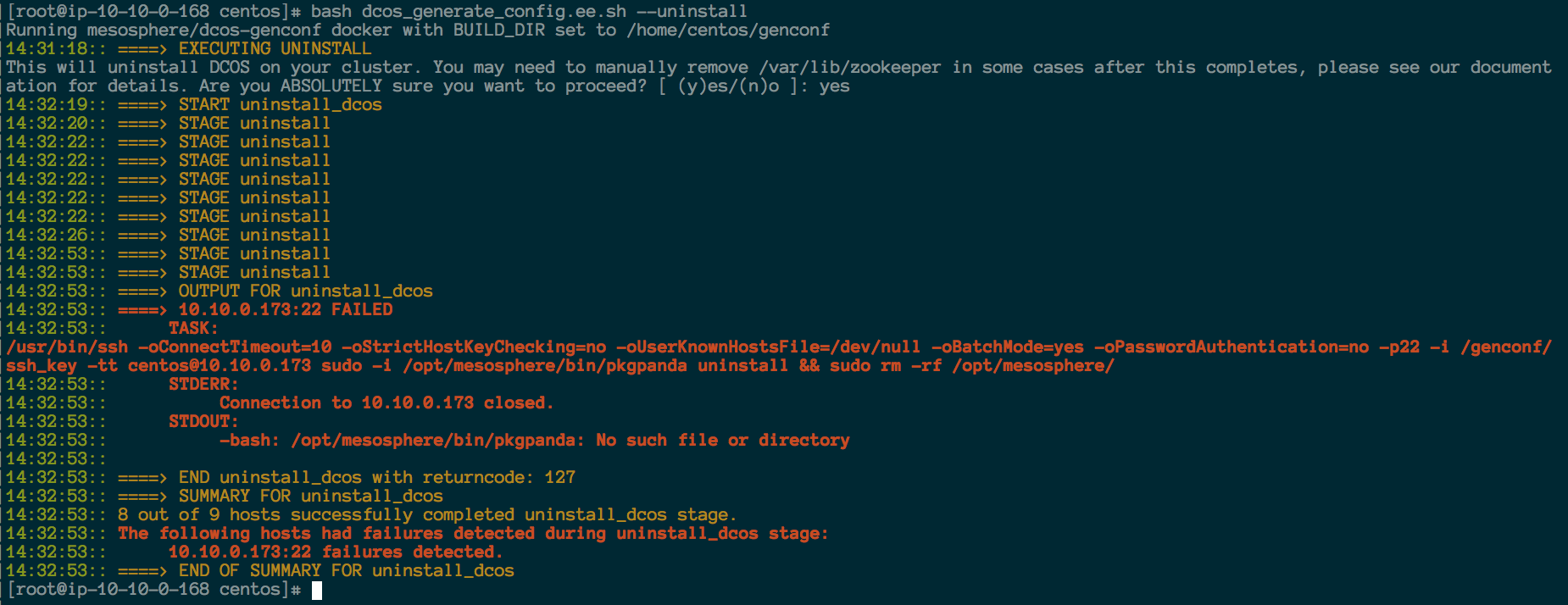

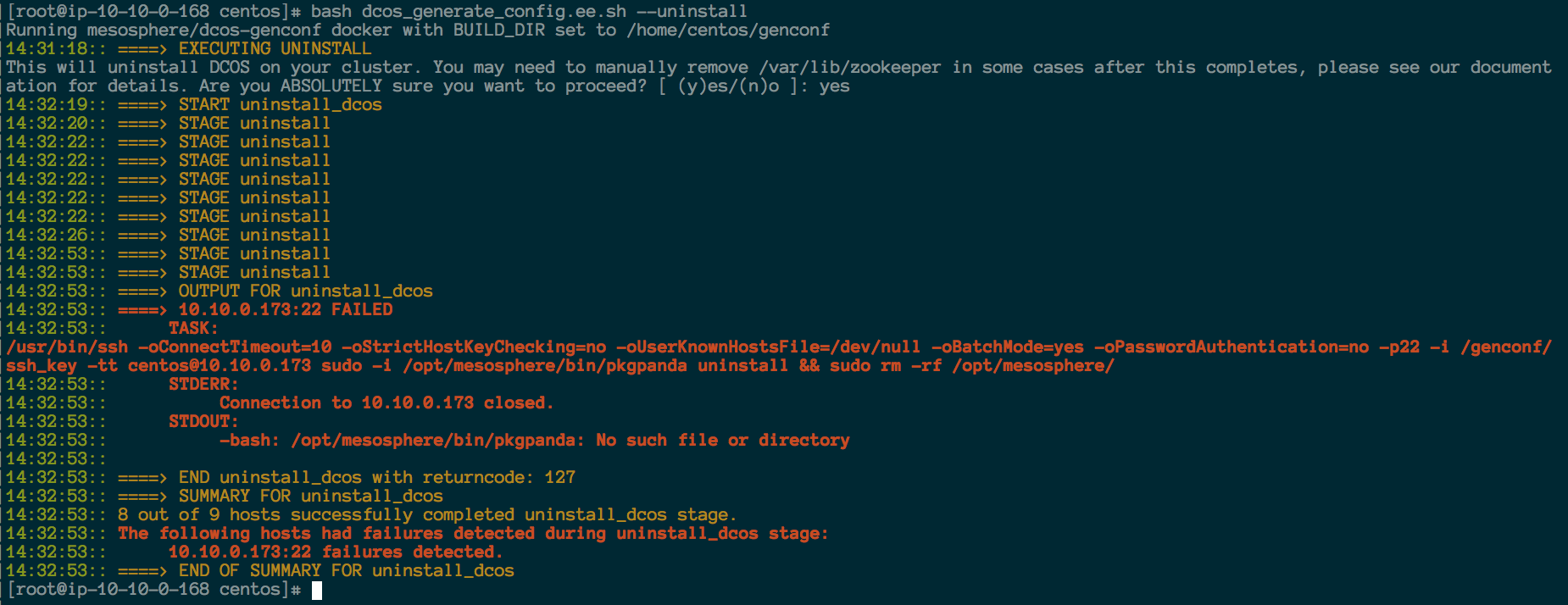

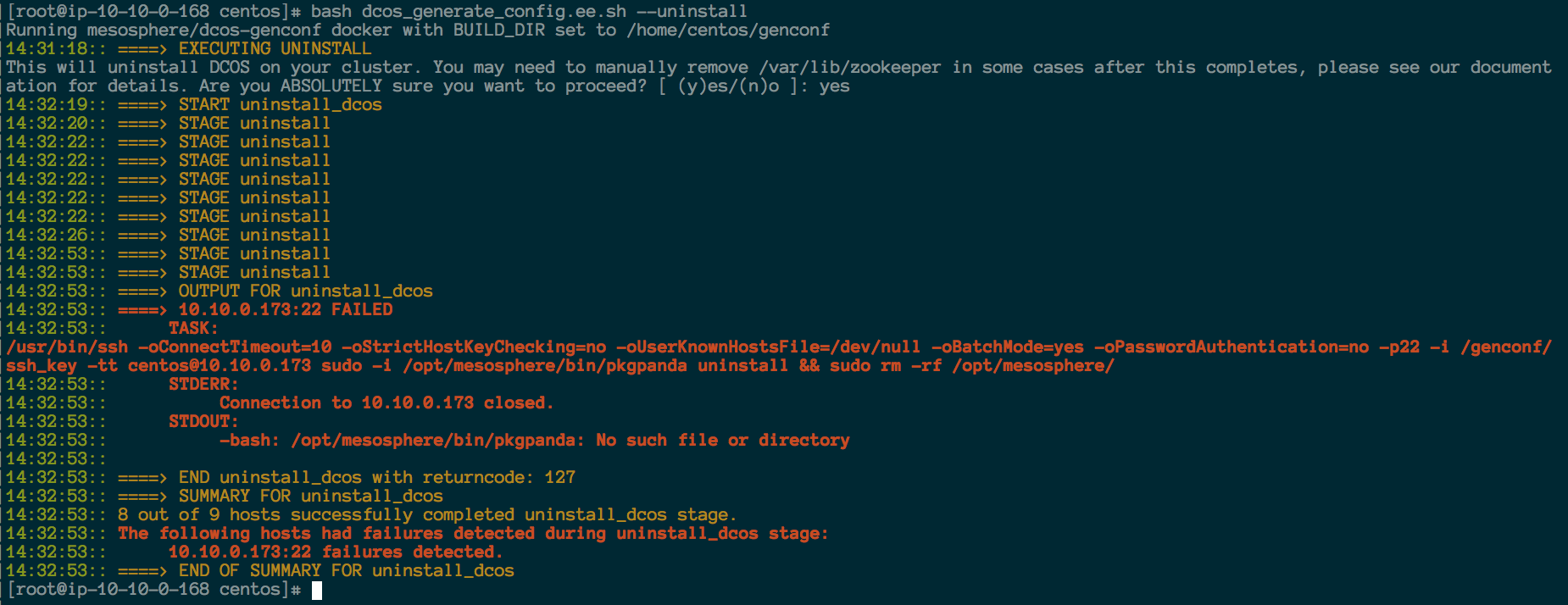

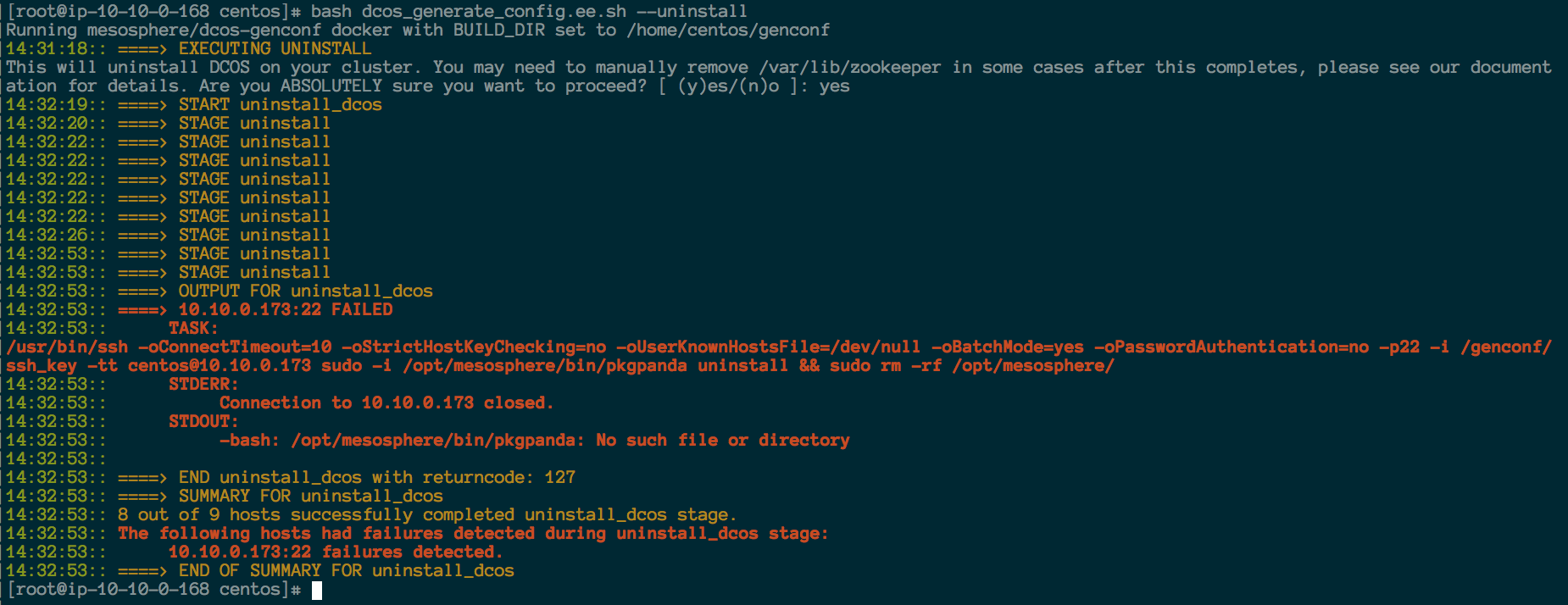

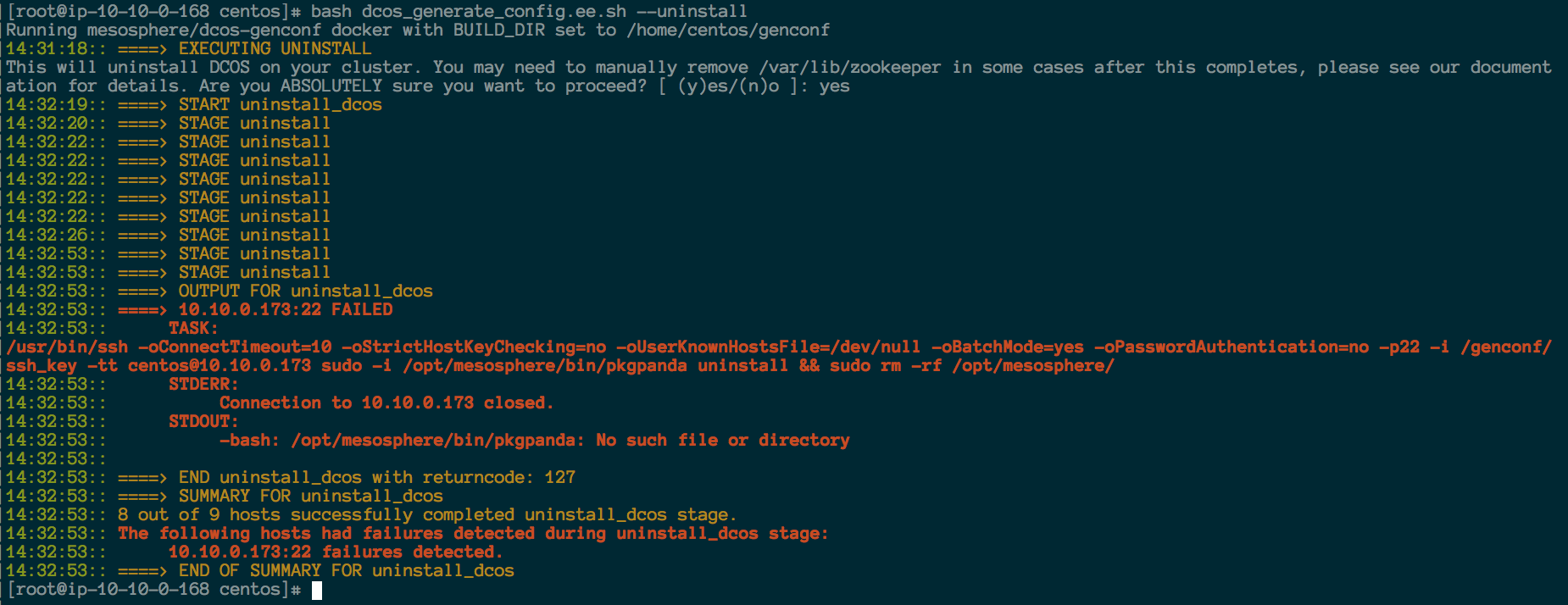

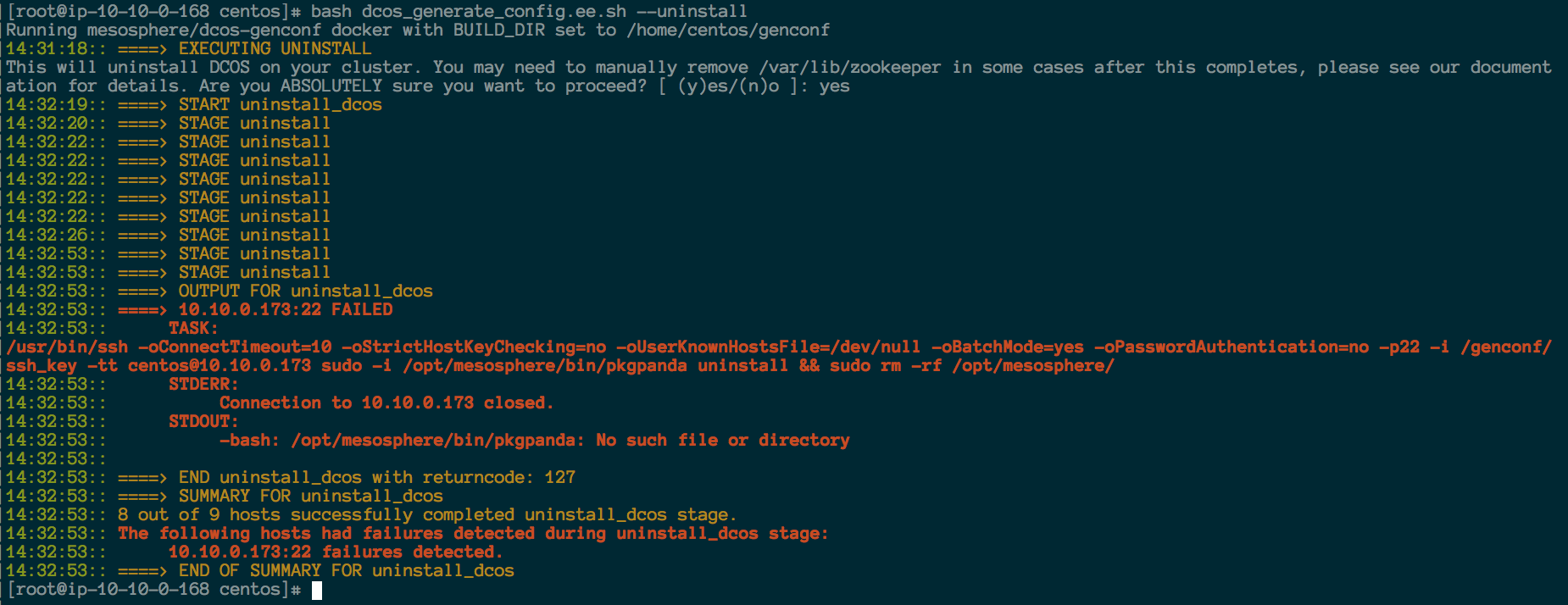

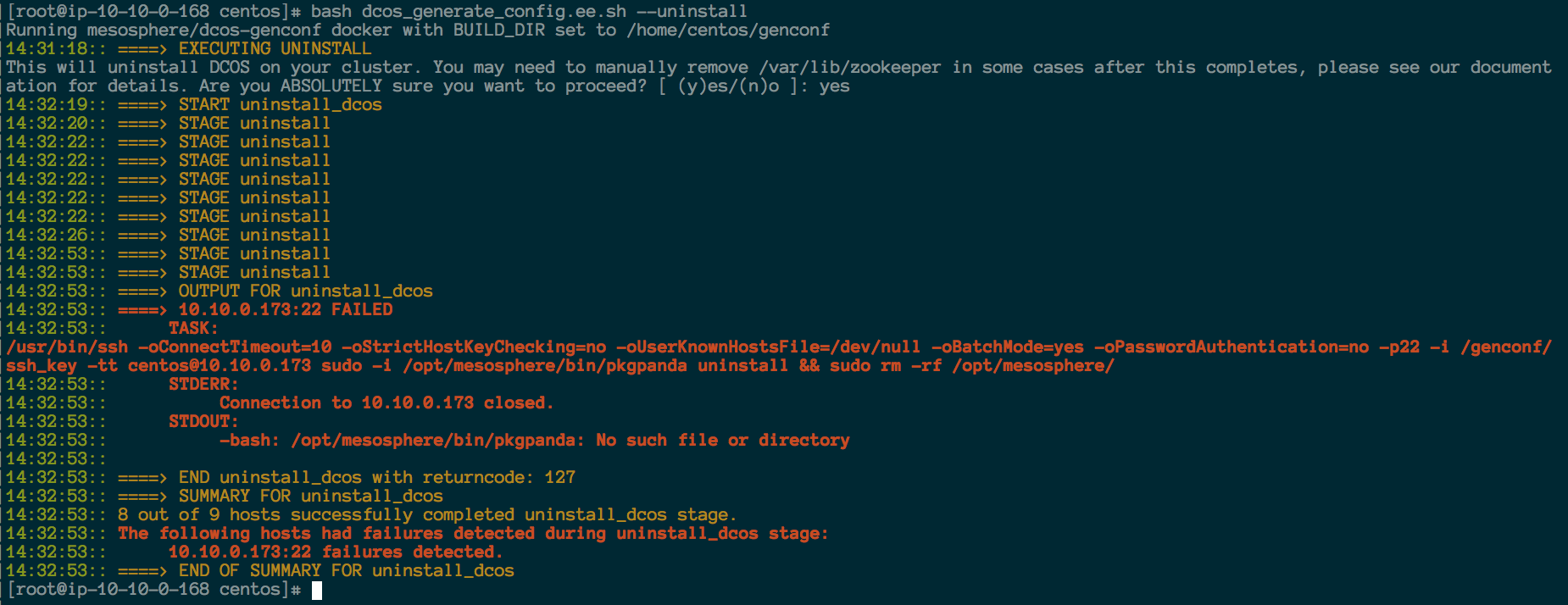

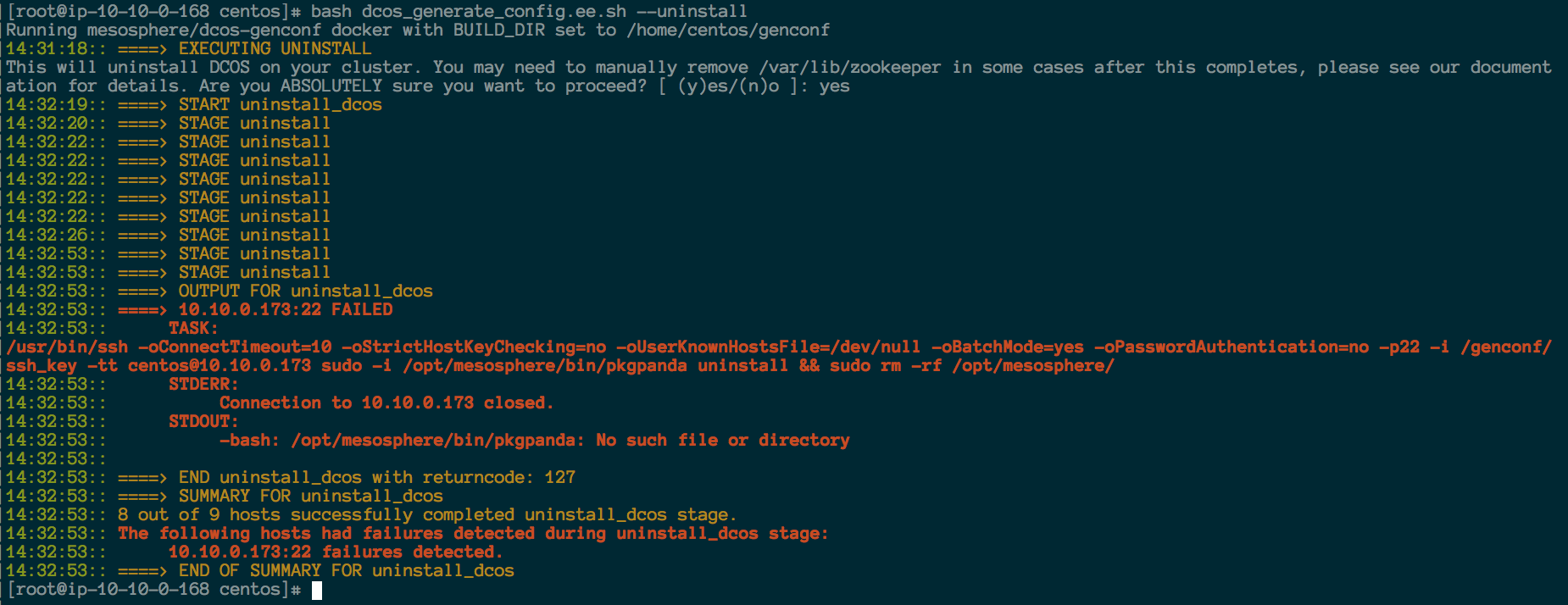

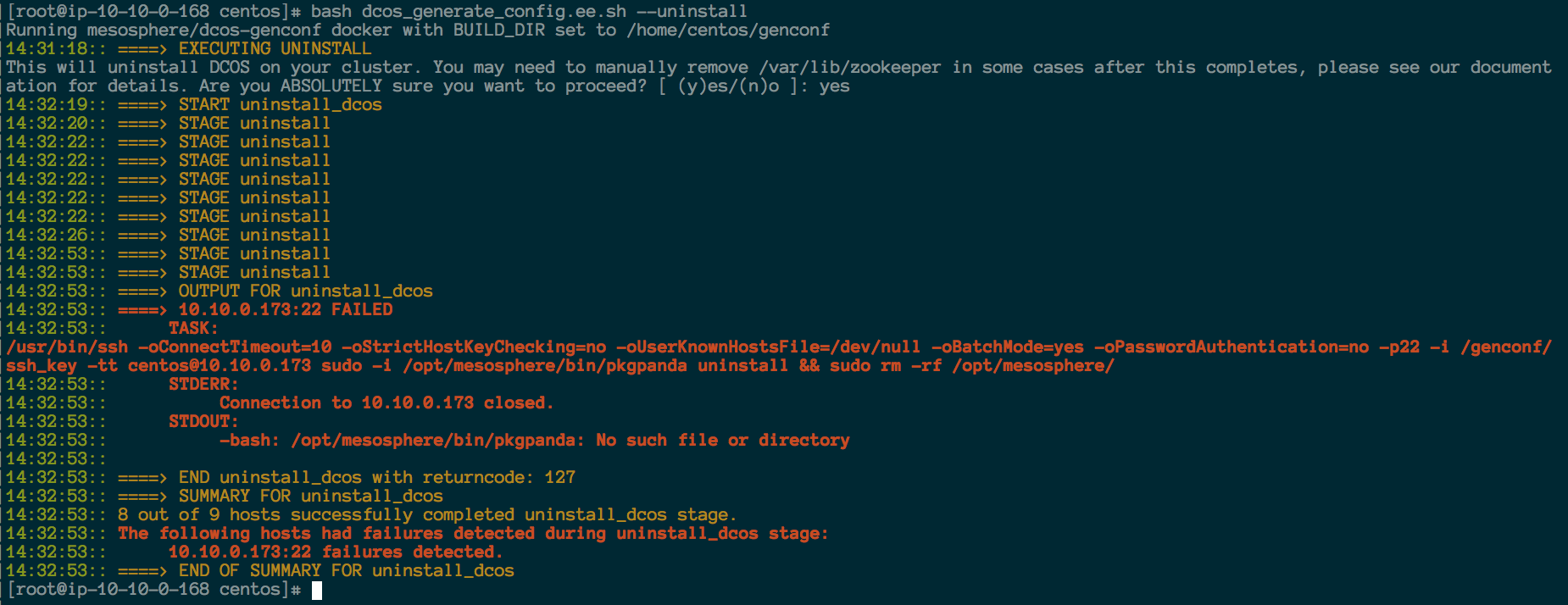

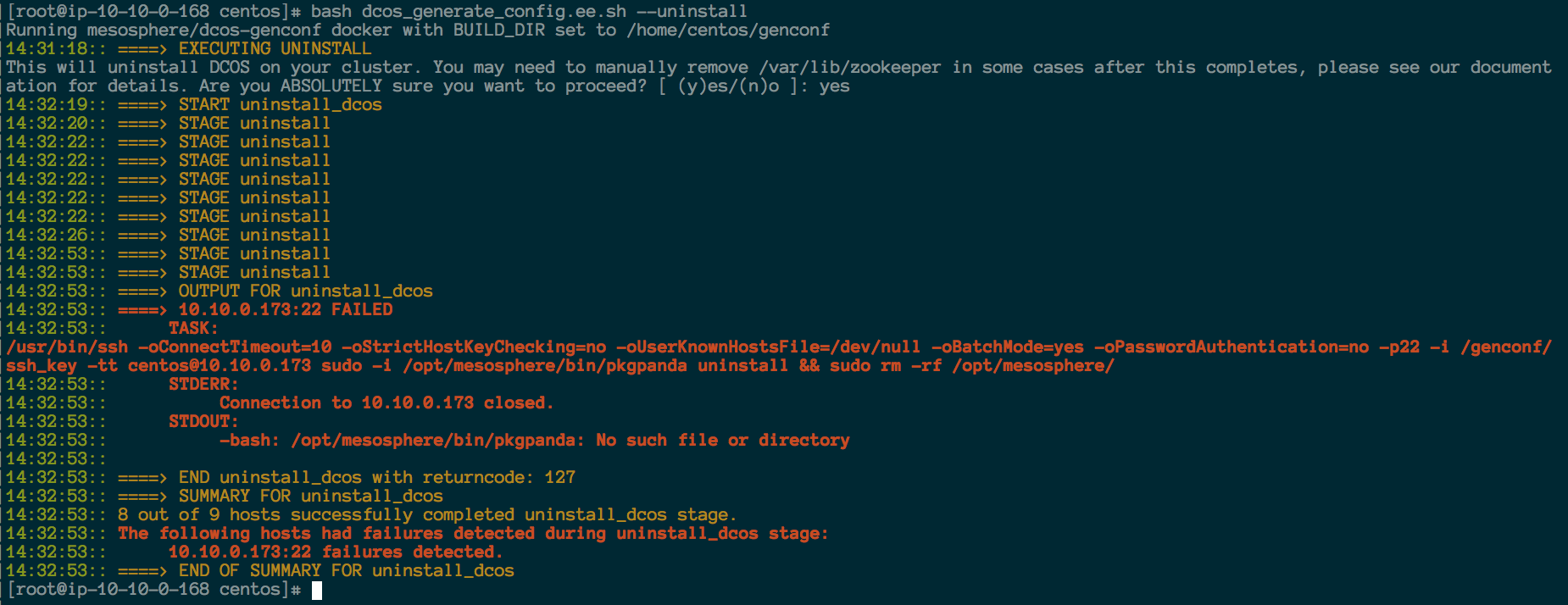

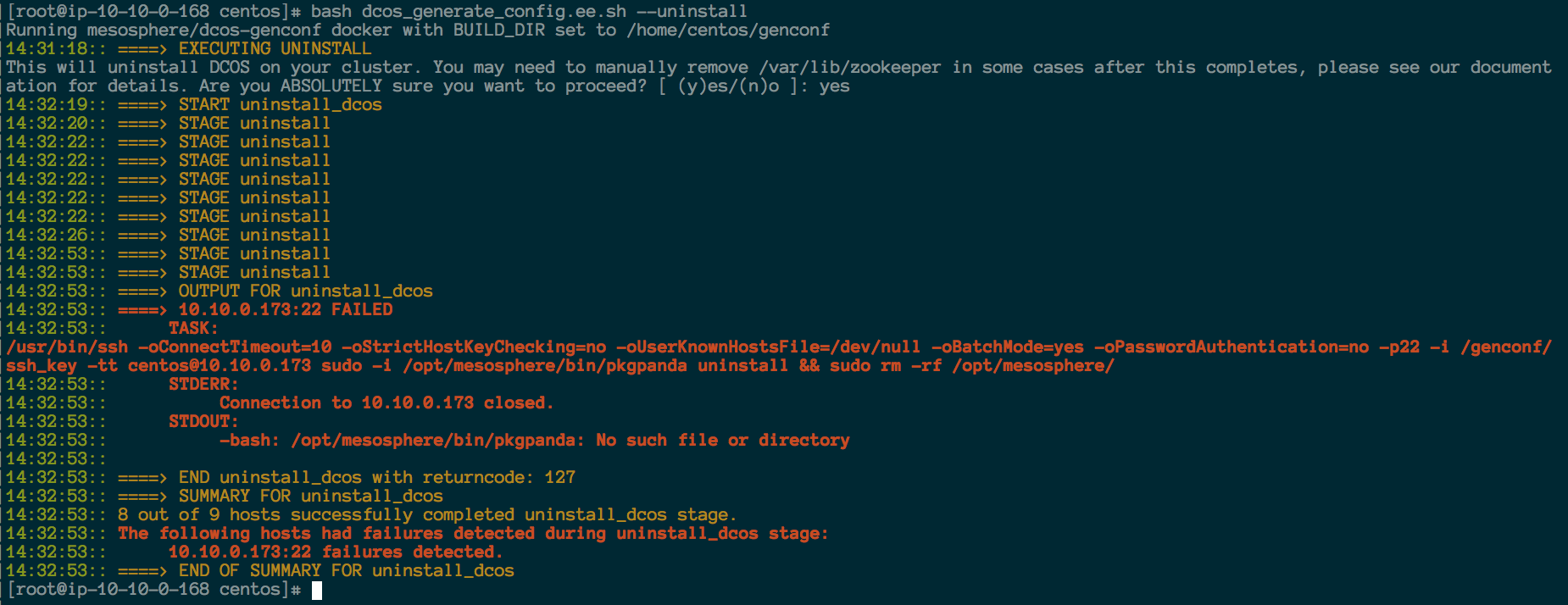

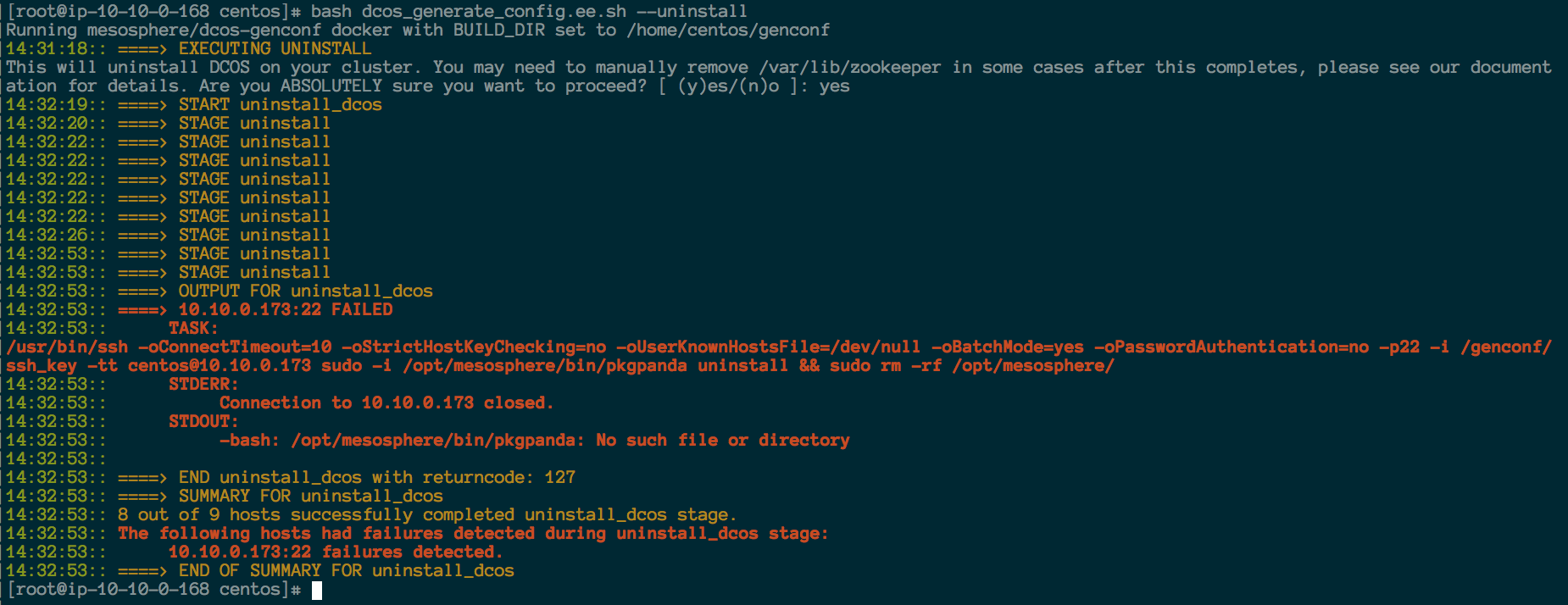

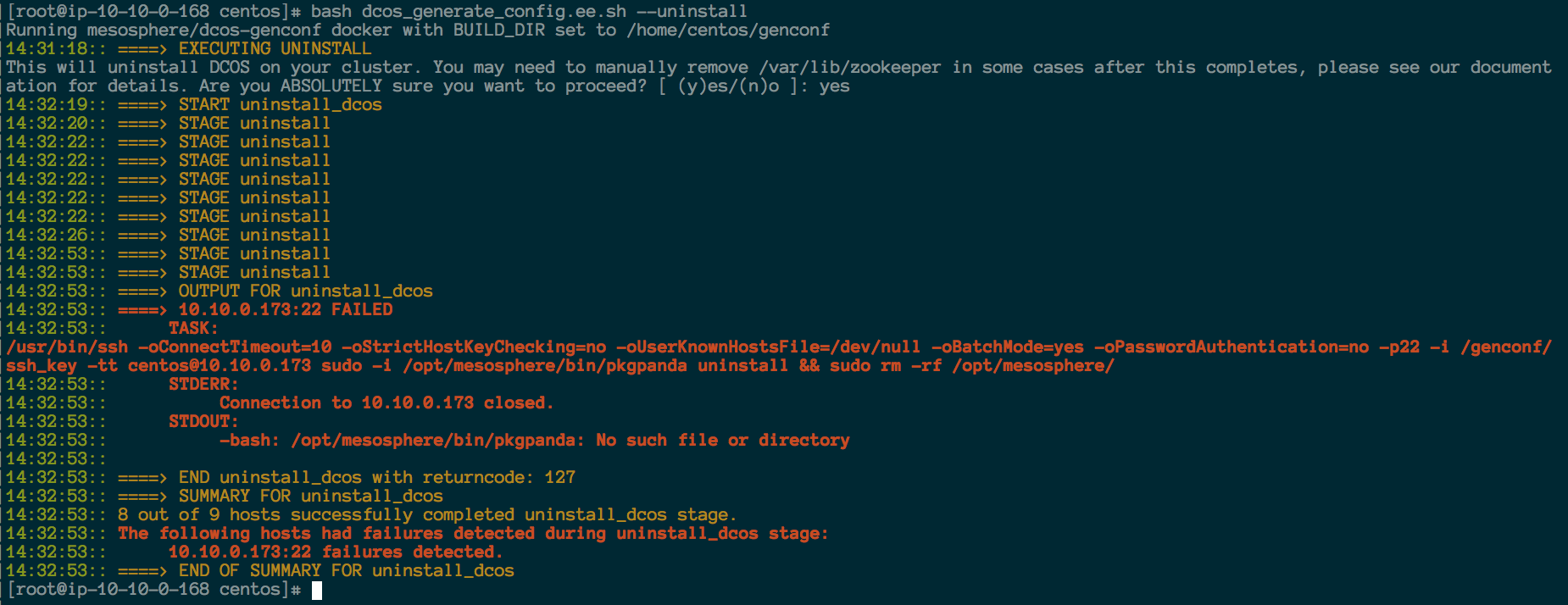

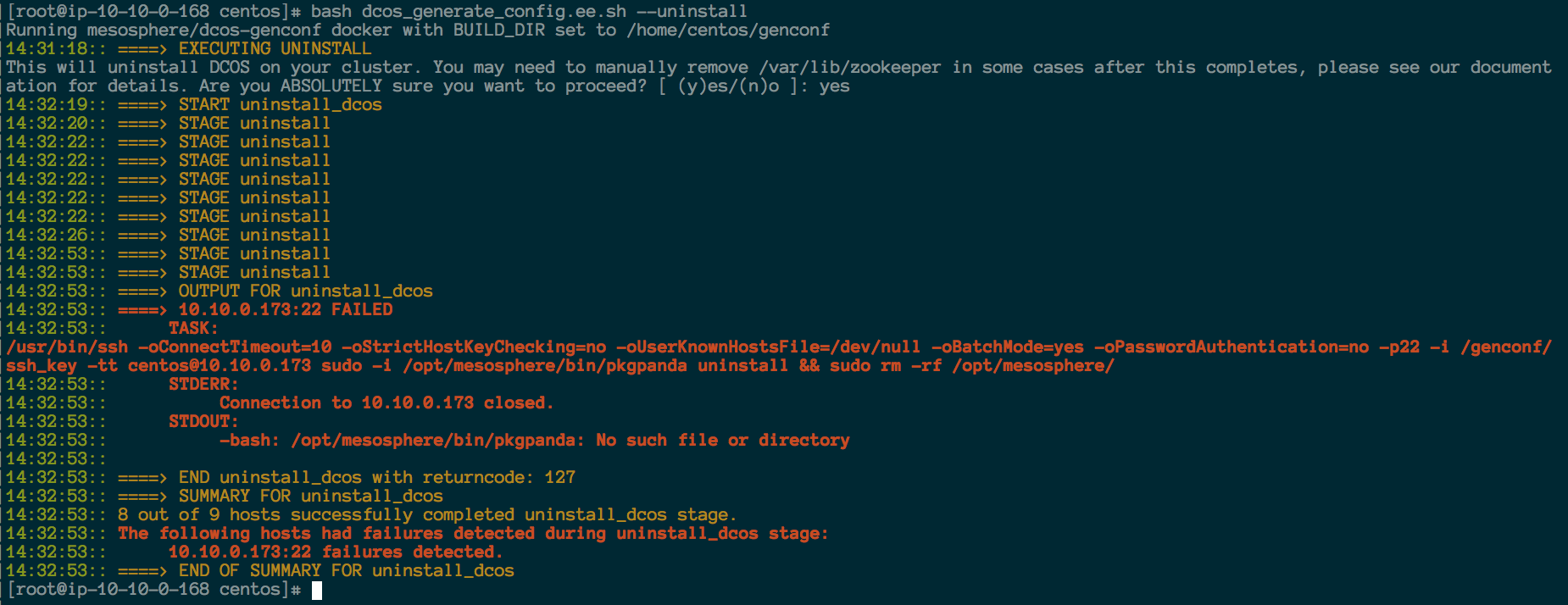

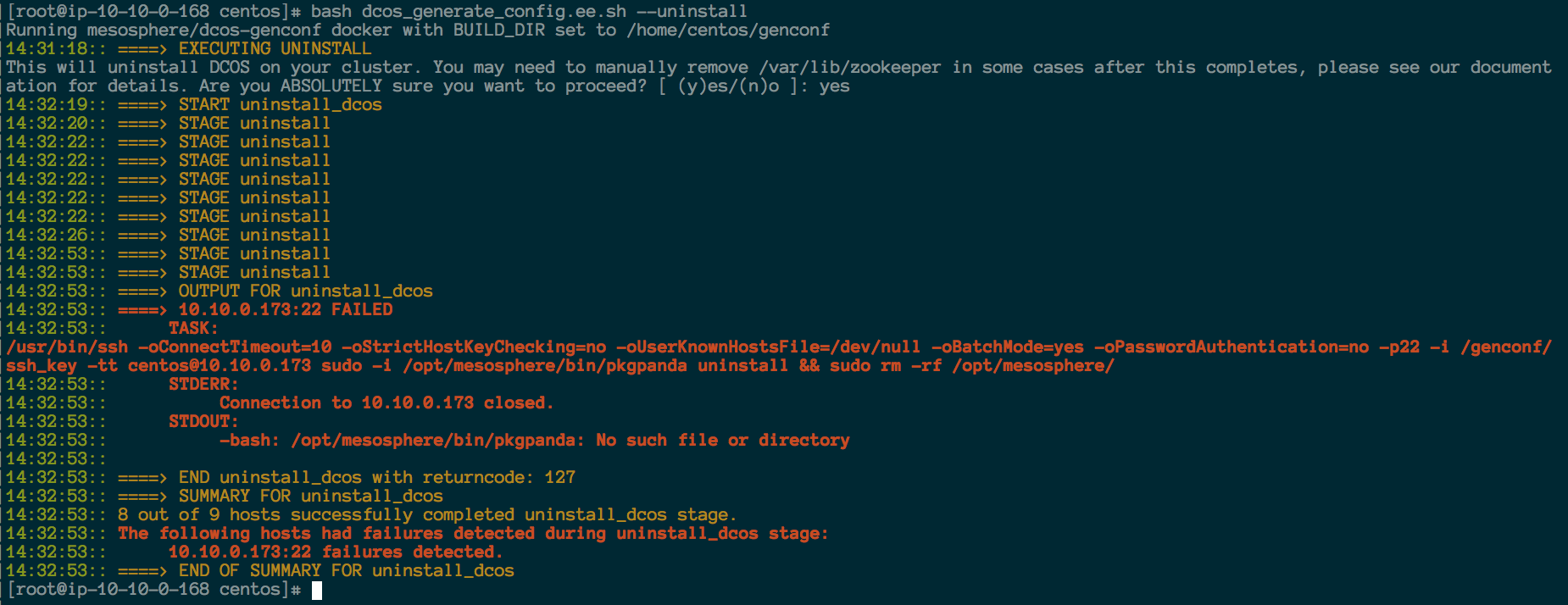

Optionally, if things go south, you can uninstall, but only after accepting the agreement:

Then:

That's it!

Consider this your one-stop shop for deploying your highly available, fault-tolerant, enterprise-scale Datacenter Operating System. We have many improvements and features that we'll be adding to the installer in the very near future, and we'll be sure to share them here as well.